Machine learning algorithm based on black box speech recognition target confrontation samples

Recent research by Google Brain has shown that any machine learning classifier can be tricked into giving incorrect predictions. In automatic speech recognition (ASR) systems, deep recurrent networks have achieved some success, but many people have proved that small anti-interference can deceive deep neural networks. The current work on deceiving the ASR system mainly focuses on white box attacks. Alzantot et al. Proved that black box attacks using genetic algorithms are feasible.

In the following paper introduced by the machine learning team of the University of California, Berkeley, a new black box attack field is introduced, especially in deep nonlinear ASR systems that can output any length of conversion. The author proposes a black box attack method that combines genetic algorithm and gradient estimation, so that it can produce better adversarial samples than the single algorithm.

In research, improved genetic algorithms are applied to phrases and sentences; limiting noise to the high-frequency domain can improve the similarity of samples; and when the adversarial samples are close to the target, gradient estimation will be more effective than genetic algorithms. The trade-offs have opened new doors for future research.

The following is an excerpt from the paper:

Introduction to adversarial attacks

Because of the strong expressive ability of neural networks, they can be well adapted to various machine learning tasks, but on more than multiple network architectures and data sets, they are vulnerable to hostile attacks. These attacks will cause the network to misclassify the input by adding small disturbances to the original input, but human judgment will not be affected by these disturbances.

So far, compared to other fields, such as the field of speech systems, much work has been done to generate adversarial samples for image input. And from personalized voice assistants, such as Amazon ’s Alexa and Apple ’s Siri, to in-vehicle voice command technology, one of the main challenges faced by such systems is correctly judging what the user is saying and correctly interpreting those words ’intentions. These systems better understand users, but there is a potential problem with targeting the system against attacks.

In automatic speech recognition (ASR) systems, the application of deep loop networks in speech transcription has achieved impressive progress. Many people have shown that small countermeasures can deceive deep neural networks and make them mispredict a specific target. The current work on spoofing ASR systems is mainly focused on white box attacks, in which the model architecture and parameters are known.

Adversarial Attacks: The input form of machine learning algorithms is numeric vectors. By designing a special input to make the model output wrong results, this is called adversarial attacks. According to the attacker's knowledge of the network, there are different ways to perform hostile attacks:

White box attack: Fully understand the model and training set; if a network parameter is given, the white box attack is the most successful, such as Fast Grandient Sign Method and DeepFool;

Black box attack: No knowledge of the model, little or no knowledge of the training set; however, an attacker can access all parameters of the network, which is unrealistic in practice. In a black box setting, when an attacker can only access the logic or output of the network, it is difficult to consistently create a successful hostile attack. In some special black box settings, if the attacker creates a model that is an approximation or approximation of the target model, the white box attack method can be reused. Even if the attack can be transferred, more technology is needed to solve this task in some areas of the network.

Attack strategy:

Gradient-based method: FGSM fast gradient method;

Optimization-based method: use carefully designed original input to generate adversarial samples;

Past research

In previous research work, Cisse et al. Developed a general attack framework for working on various models including images and audio. Compared with images, audio provides a greater challenge for the model. Although the convolutional neural network can directly affect the pixel values ​​of the image, the ASR system usually requires a lot of preprocessing of the input audio.

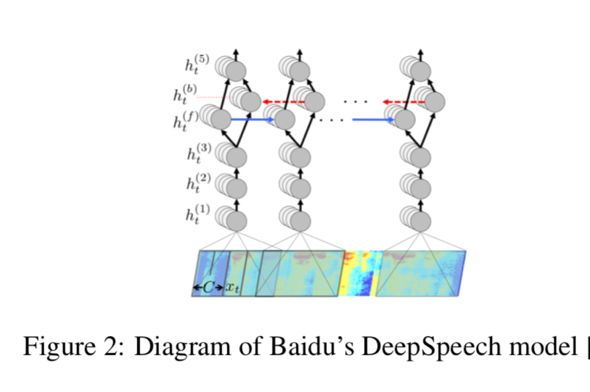

The most common is the Mel-Frequency Conversion (MFC), which is essentially the Fourier transform of the sampled audio file, which converts the audio into a spectogram showing the frequency change with time. The DeepSpeech model in the following figure uses spectogram as the initial input . When Cisse et al. Applied their method to audio samples, they encountered a roadblock that passed back through the MFC conversion layer. Carlini and Wagner overcame this challenge and developed a method for transferring gradients through the MFC layer.

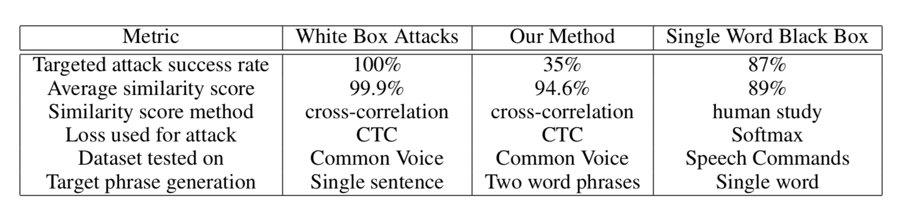

They applied the method to the Mozilla DeepSpeech model (the model is a complex, iterative, character-level network that decodes a translation of 50 characters per second). They achieved impressive results, generating more than 99.9% of the samples, similar to 100% of the target attack. Although the success of this attack opened a new door for the white box attack, in real life, the opponent usually does not know Model architecture or parameters. Alzantot et al. Proved that a target attack against an ASR system is possible. Using genetic algorithm methods, it is possible to apply noise to audio samples iteratively. This attack is carried out on a voice command classification model, which is lightweight Convolutional model, used to classify 50 different word phrases.

This article studies

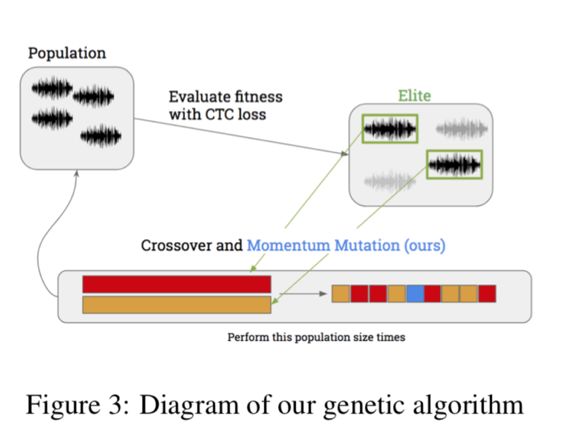

This paper uses a black box attack, combined with genetic algorithm and gradient estimation method to create targeted anti-audio to achieve deceptive ASR system. The first stage of the attack is carried out by genetic algorithm, which is an optimization method that does not need to calculate the gradient. Iterate over the candidate sample population until a suitable sample is generated. In order to limit excessive mutations and excess noise, we use momentum mutation updates to improve the standard genetic algorithm.

The second stage of the attack uses gradient estimation, because the gradient of a single audio point is estimated, so when the hostile sample is close to the target, more fine noise can be set. The combination of these two methods provides 94.6% audio file similarity and 89.25% target attack similarity after 3000 iterations. The difficulty with more complex deep speech systems is trying to apply the black box optimization to a deep layered, highly nonlinear decoder model. Nevertheless, the combination of two different approaches and momentum mutations has brought new success to this task.

Data and methods

Data set: The attacked data set obtained the first 100 audio samples from the Common Voice test set. For each, randomly generate a 2-word target phrase and apply our black box method to construct the first adversarial sample. The sample in each data set is a .wav file, which can be easily deserialized into a numpy array, thus Our algorithm works directly on numpy arrays to avoid the difficulty of handling problems.

Victim model: The model we attack is the Baidu deep voice model that is open sourced on Mozilla and implemented in Tensorflow. Although we can use the complete model, we still treat it as a black box attack, accessing only the output logic of the model. After performing MFC conversion, the model consists of 3 layers of convolution, followed by a bidirectional LSTM, and finally a fully connected layer.

Genetic algorithms: As mentioned earlier, Alzantot et al. Demonstrated the success of black box counterattacks on speech-to-text systems using standard genetic algorithms. The genetic algorithm with CTC loss is very effective for problems of this nature because it is completely independent of the gradient of the model.

Gradient estimation: When the target space is large, genetic algorithms can work well, and relatively more mutation directions may be beneficial. The advantage of these algorithms is that they can efficiently search a large number of spaces. However, when the decoded distance and target decoding are below a certain threshold, it is necessary to switch to the second stage. At this time, the gradient evaluation technique is more effective, and the countermeasure sample is already close to the target. The gradient evaluation technology comes from a 2017 NiTIn Bhagoji paper on black box attacks in the field of images.

Results and conclusions

Evaluation criteria: Two main methods are used to evaluate the performance of the algorithm; one is the accuracy of the accurate hostile audio samples being decoded to the desired target phrase; for this, we use the Levenshtein distance or the minimum character editing distance. The second is to determine the similarity between the original audio samples and the hostile audio samples.

Experimental results

In the audio samples from which we run the algorithm, a similarity of 89.25% is achieved between the final decoded phrase using Levenshtein distance and the target; the correlation between the final hostile sample and the original sample is 94.6%. After 3000 iterations, the average final Levenshtein distance is 2.3, 35% of the hostile samples completed accurate decoding in less than 3000 iterations, and 22% of the hostile samples completed accurate decoding in less than 1000 iterations.

The performance of the algorithm proposed in this paper is different from the data in the table. Running the algorithm in several iterations can produce a higher success rate. In fact, there is obviously a trade-off between the success rate and the similarity rate, so that you can pass Adjust the threshold to meet the different needs of attackers.

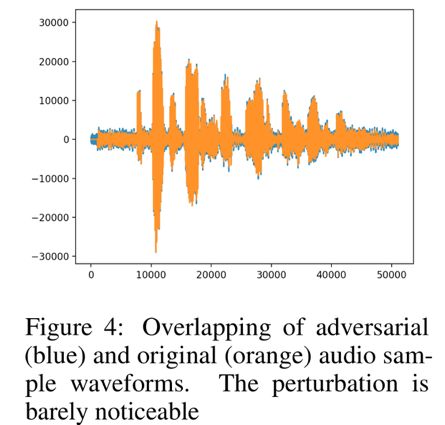

Compare the white box attack, black box attack single word (classification), and our proposed method: through the overlap of the two waveforms, you can see the similarity between the original audio sample and the adversarial sample, as shown in the following figure, 35 The% attack is a successful emphasis on the fact that the black box is not only deterministic but also very effective against the attack.

Experimental results

We have implemented black box confrontation in the process of combining genetic algorithm and gradient estimation, which can produce better samples than each algorithm alone.

Since the initial use of genetic algorithms, the transcription of most audio samples has been nearly perfect, while still maintaining a high degree of similarity. Although this is largely a conceptual verification, the research in this paper shows that the black box model using the direct method can achieve targeted confrontation attacks.

In addition, adding momentum mutations and adding noise at high frequencies improves the effectiveness of our method and emphasizes the advantages of combining genetic algorithms and gradient estimation. Limiting noise to the high frequency domain improves our similarity. By combining all these methods, we can achieve our highest results.

In summary, we have introduced the new field of black box attacks. Combining existing and novel methods can demonstrate the feasibility of our method and open new doors for future research.

Wuxi Lerin New Energy Technology Co.,Ltd. , https://www.lerin-tech.com