In-depth analysis of the technology behind real-time voice and video

With the rapid development of smart hardware and steadily improving network conditions, real-time voice and video applications are spreading ever more widely, from interactive live streaming to casual games to social apps for meeting strangers. Keeping real-time interaction smooth and free of stutter, eliminating echo, and scheduling traffic across globally deployed network nodes have become the key problems. At LiveVideoStack Meet, Jiang Ningbo, co-founder of a real-time audio and video cloud company, used voice and video social apps as an example to analyze real-time voice and video interaction technology in depth.

Hello everyone, I am Jiang Ningbo, a co-founder of our company. Today's topic is "In-depth analysis of real-time voice and video technology", and I hope to share some of the technical points of real-time audio and video interaction. First, a quick introduction: from 2005 to 2015 I worked at Tencent, where early on I was responsible for a partial rebuild within the QQ Hummer project and later for QQ security, including opening QQ's security capabilities to other enterprises. In 2015 we co-founded our company, a cloud service provider for real-time audio and video that aims to offer the world's most stable, highest-quality real-time voice and video cloud services. Our main products cover multi-party real-time voice, multi-party real-time video and interactive live streaming. Existing customers include Inke, Huajiao, Yizhibo, Ximalaya FM, 6.cn, Kugou Live, Freedom War 2 and TAL Education.

Today I will share four topics. First, the trends we have seen in industry applications over two years of working with a large number of real-time audio and video customers; second, the technical difficulties of real-time audio and video interaction; third, our approach to solving them; and finally, how to choose a real-time voice and video cloud service provider.

Industry Trends

In recent years, as smart devices such as mobile phones and network conditions have improved, real-time audio and video has become more and more widely used. In entertainment, it has spread from interactive live streaming to casual games with built-in audio and video SDKs and social features, and then to audio and video social apps for meeting strangers. During the live-streaming boom from the second half of 2015 through 2016, essentially every first- and second-tier live-streaming platform added co-hosting (Lianmai), letting multiple hosts interact in real time. From late 2016 to early 2017, casual games with audio and video social features suddenly took off, the most typical being Werewolf, along with some board and card games. Today the most popular category is video social apps for strangers, such as the group video chat product Houseparty and the youth social network Monkey. As beauty filters and other image processing technologies mature, more and more digital natives born in the 1990s and 2000s have made video socializing part of their daily lives.

Real-time audio and video interaction difficulties

A real-time interactive audio and video system faces many technical difficulties; I will pick out several important ones. First, low latency: for smooth real-time interaction, the one-way end-to-end delay generally needs to stay below 400 milliseconds. Second, fluency: if the video keeps stuttering, good interaction is hard to imagine. Third, echo cancellation: echo arises when sound played by the speaker reflects off the environment, is picked up again by the microphone and transmitted back, so the other party keeps hearing their own voice, which makes the whole interaction very uncomfortable. Fourth, interoperability between China and other countries: as similar products multiply and domestic competition grows fierce, many vendors have chosen to expand overseas, which requires connecting users at home and abroad reliably. Fifth, massive concurrency, which of course is not specific to real-time audio and video but is a difficulty almost every Internet product must consider.

Solutions

Next, focusing on the technical difficulties above, I will share our team's approach and practical experience.

Low latency

First, to keep latency low in real-time audio and video, every link in the front-end and back-end chain must be pushed to the extreme, including encoding algorithms, flow control, and even frame-dropping and frame catch-up strategies. In addition, different service scenarios call for different encoders, which introduce different encoding delays, so the latency each scenario can achieve also differs.
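
To make the frame catch-up idea concrete, here is a minimal sketch of one possible policy, assuming a receiver that queues video frames tagged with a timestamp and a keyframe flag. The `Frame` type, `catch_up` function and the backlog threshold are hypothetical illustrations, not the engine's actual strategy: when the queue holds too much media, it jumps to the newest keyframe so latency drops without breaking decoding.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    ts_ms: int    # capture timestamp in milliseconds
    is_key: bool  # True for an independently decodable keyframe


def catch_up(queue: List[Frame], max_backlog_ms: int) -> List[Frame]:
    """Drop stale frames when the receive queue holds too much media.

    Hypothetical policy: if the span between the oldest and newest queued frame
    exceeds max_backlog_ms, jump to the newest keyframe so decoding can restart
    there without missing reference frames.
    """
    if len(queue) < 2 or queue[-1].ts_ms - queue[0].ts_ms <= max_backlog_ms:
        return queue
    # Walk backwards to the newest keyframe; everything before it is discarded.
    for i in range(len(queue) - 1, -1, -1):
        if queue[i].is_key:
            return queue[i:]
    # No keyframe queued: dropping now would break decoding, so keep waiting.
    return queue
```

Jumping only to a keyframe trades a visible skip for an immediate latency reduction; a real engine would also coordinate with the audio track and could request a new keyframe from the sender.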

Second is the choice of network for pushing and pulling streams. The usual approach is to have users who need to interact in real time send voice and video through a core voice and video network built on high-quality nodes such as BGP nodes. In some scenarios, such as interactive games that are also broadcast live to onlookers, the stream must additionally be transcoded, converted to another protocol, and even mixed, and then distributed through a content delivery network (CDN). A CDN naturally provides nearest-node access, but access to the core voice and video network needs an intelligent scheduling strategy to achieve nearest-node access and to handle cross-carrier and cross-region access. For example, scheduling can be based on the user's last login IP, frequently used IP and region, or candidate nodes can even be speed-tested before connecting. Of course, the scheduling policy must be planned carefully according to the distribution of the user base, and multiple policies can even be combined with configurable weights.
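
The idea of combining several scheduling policies with weights can be sketched as a scoring function over candidate nodes. This is a hypothetical illustration: it assumes the user's region and carrier have already been derived from the login IP, that each candidate node has a pre-connection speed-test RTT, and the weights in `WEIGHTS` are made-up values that would in practice be tuned to the user distribution.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    region: str
    isp: str        # carrier, e.g. "telecom", "unicom", or "bgp" for multi-carrier nodes
    rtt_ms: float   # result of a speed test run before connecting


# Hypothetical policy weights; real values would be tuned per user distribution.
WEIGHTS = {"region": 30.0, "isp": 20.0, "last_used": 10.0, "rtt": 0.2}


def score(node: Node, user_region: str, user_isp: str, last_node: Optional[str]) -> float:
    s = 0.0
    if node.region == user_region:
        s += WEIGHTS["region"]
    # A BGP node is reachable well from every carrier, so treat it as an ISP match.
    if node.isp in (user_isp, "bgp"):
        s += WEIGHTS["isp"]
    if node.name == last_node:
        s += WEIGHTS["last_used"]
    s -= WEIGHTS["rtt"] * node.rtt_ms  # lower measured latency scores higher
    return s


def pick_node(nodes: List[Node], user_region: str, user_isp: str,
              last_node: Optional[str] = None) -> Node:
    """Return the candidate with the highest combined policy score."""
    return max(nodes, key=lambda n: score(n, user_region, user_isp, last_node))
```

A linear score keeps each policy's influence explicit and easy to adjust; a deployed scheduler would also account for node load.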

Fluency

Achieving fluency also involves many technical difficulties and strategies; I will introduce a few of them. The first is a jitter buffer that can be resized dynamically. When the network is poor or jitter is severe, the jitter buffer can be enlarged appropriately, trading a little extra delay for resistance to jitter.
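
A minimal sketch of how a dynamically sized jitter buffer might pick its target delay, assuming packets carry a sender timestamp. The class name, window size and percentile are hypothetical; the rule used here (a high percentile of recent delay variation, clamped to a range) is one common heuristic, not necessarily the one used in production engines.

```python
from collections import deque


class AdaptiveJitterBuffer:
    """Target playout delay that grows with observed jitter and shrinks when the network calms.

    Sketch only: target = high percentile of recent transit-time variation, clamped.
    """

    def __init__(self, window: int = 100, percentile: float = 0.95,
                 min_ms: float = 20.0, max_ms: float = 400.0):
        self.samples = deque(maxlen=window)  # recent transit times
        self.percentile = percentile
        self.min_ms = min_ms
        self.max_ms = max_ms

    def on_packet(self, send_ts_ms: float, arrival_ts_ms: float) -> None:
        # Transit time includes an unknown constant clock offset; only its variation matters.
        self.samples.append(arrival_ts_ms - send_ts_ms)

    def target_delay_ms(self) -> float:
        if not self.samples:
            return self.min_ms
        base = min(self.samples)  # the fastest recent packet
        variation = sorted(s - base for s in self.samples)
        idx = min(len(variation) - 1, int(self.percentile * len(variation)))
        return max(self.min_ms, min(self.max_ms, variation[idx]))
```

Because the window only remembers recent packets, the target delay falls back automatically once jitter subsides, which is the "dynamically scaled" behavior described above.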

The second is accelerated and slowed playback. In a poor network environment, playback can be slowed slightly, without the user noticing, to absorb the stutter that short-term jitter would otherwise cause; once the network recovers, playback can be sped up to catch up. However, this method does not suit every scenario: in singing, for example, where rhythm must be precise, even a slight slowdown is noticeable.
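
The control side of accelerated and slowed playback can be sketched as a function that maps buffer fill to a playback speed factor. This assumes a hypothetical `playout_rate` helper with an assumed limit of plus or minus 10% and a dead band; it only decides the speed, and the actual pitch-preserving time stretching (for example a WSOLA-style algorithm) is not shown. The `allow_time_stretch` flag reflects the point above that rhythm-sensitive scenarios such as singing should not use it.

```python
def playout_rate(buffer_ms: float, target_ms: float, allow_time_stretch: bool = True,
                 max_stretch: float = 0.1, deadband_ms: float = 20.0) -> float:
    """Return a playback speed factor (1.0 = normal speed).

    When the buffer drains below target, play slightly slower to avoid a stall;
    when it overfills after the network recovers, play slightly faster to shed
    the extra delay. The +/-10% limit is an assumed value.
    """
    if not allow_time_stretch:       # e.g. singing, where rhythm must not drift
        return 1.0
    error = buffer_ms - target_ms
    if abs(error) <= deadband_ms:    # close enough to target: play normally
        return 1.0
    # Correction grows linearly with the relative error and saturates at max_stretch.
    correction = error / max(target_ms, 1.0) * max_stretch
    correction = max(-max_stretch, min(max_stretch, correction))
    return 1.0 + correction
```

The dead band avoids constantly wobbling the speed around the target, which listeners would be more likely to notice than a steady small adjustment.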

The third is bitrate adaptation, which transmits at a bitrate suited to current conditions; to keep playback smooth, the frame rate and resolution can be adjusted as well. The voice and video engine dynamically adjusts the bitrate, frame rate and resolution based on the current network bandwidth measurement and the bitrate the application expects, ultimately giving viewers a smooth experience.
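
One simple way to adjust bitrate, frame rate and resolution together is to pick a rung from a predefined encoding ladder. The sketch below assumes a hypothetical ladder and a headroom factor of 0.85; the target is capped by both the application's expected bitrate and the measured bandwidth, as described above. Real engines typically combine this with a congestion-control bandwidth estimator.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rung:
    kbps: int
    fps: int
    width: int
    height: int


# Hypothetical encoding ladder, highest quality first.
LADDER: List[Rung] = [
    Rung(1200, 25, 1280, 720),
    Rung(800, 20, 960, 540),
    Rung(500, 15, 640, 360),
    Rung(250, 10, 480, 270),
]


def choose_rung(estimated_kbps: float, expected_kbps: float, headroom: float = 0.85) -> Rung:
    """Pick bitrate, frame rate and resolution together.

    The target is capped by the application's expected bitrate and by a fraction
    of the measured bandwidth (headroom leaves room for audio, retransmissions
    and estimation error).
    """
    target = min(expected_kbps, estimated_kbps * headroom)
    for rung in LADDER:
        if rung.kbps <= target:
            return rung
    return LADDER[-1]  # floor: keep the stream alive at the lowest rung
```

Coupling frame rate and resolution to bitrate avoids encoding a large frame at too few bits, which tends to look worse than a smaller, sharper frame.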

The fourth is layered coding with matching transmission control: the pushing side encodes the stream in layers, so the pulling side can dynamically pull different data for rendering according to the bandwidth it detects. Layered coding lets the pulling end select different layers of the encoded video: when the network is good, it pulls more layers; when the network is poor, it pulls only the base layer.
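
The pull-side layer selection described above can be sketched as follows, assuming each layer's incremental bitrate is known. The function name and layer bitrates are illustrative; the key constraint is that layers are cumulative, so the base layer is always pulled and a higher layer is only useful if every layer below it is also pulled.

```python
from typing import List


def select_layers(layer_kbps: List[int], available_kbps: float) -> int:
    """Return how many layers (base first) a puller should subscribe to.

    layer_kbps[i] is the extra bitrate that layer i adds; layer 0 is the base
    layer, which is always pulled because every higher layer depends on it.
    """
    total = 0.0
    count = 0
    for i, kbps in enumerate(layer_kbps):
        if i > 0 and total + kbps > available_kbps:
            break  # adding this enhancement layer would exceed the bandwidth
        total += kbps
        count += 1
    return count
```

For example, with layers of 300, 300 and 600 kbps, a viewer with 700 kbps available would pull the first two layers.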

The fifth is dynamic scheduling. When monitoring shows that the current push or pull stream is of poor quality, and lowering the bitrate, frame rate and resolution still cannot guarantee quality, the link can be abandoned and a new one selected and established directly; of course, this may cause a brief pause.
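
A minimal sketch of the decision to abandon a link, assuming the engine reports loss and RTT once per monitoring interval and knows whether it is already at its lowest quality setting. The thresholds and the three-interval rule in `LinkMonitor` are hypothetical; requiring several consecutive bad intervals keeps a single spike from triggering a reconnect and its brief pause.

```python
from dataclasses import dataclass


@dataclass
class LinkMonitor:
    """Decide when to abandon the current push/pull link (hypothetical policy).

    An interval counts as bad only if loss or RTT exceed the thresholds while the
    sender is already at its lowest bitrate/frame-rate/resolution setting, i.e.
    degrading further is no longer possible.
    """
    loss_threshold: float = 0.2        # 20% packet loss
    rtt_threshold_ms: float = 800.0
    bad_intervals_to_switch: int = 3
    bad_streak: int = 0

    def report(self, loss: float, rtt_ms: float, at_lowest_quality: bool) -> bool:
        """Feed one monitoring interval; return True when a reconnect should start."""
        bad = at_lowest_quality and (loss > self.loss_threshold
                                     or rtt_ms > self.rtt_threshold_ms)
        self.bad_streak = self.bad_streak + 1 if bad else 0
        if self.bad_streak >= self.bad_intervals_to_switch:
            self.bad_streak = 0  # reset after triggering a re-selection
            return True
        return False
```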

Echo cancellation

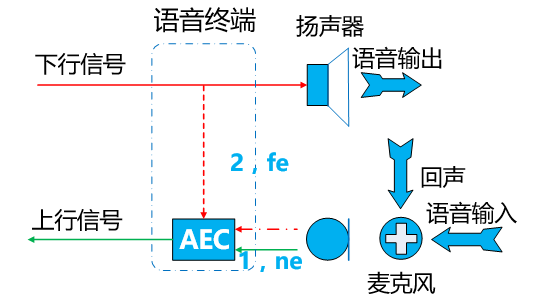

First, the principle of echo cancellation: the signal from the far end is first passed to the echo cancellation module as the reference signal to be removed later, and is then sent to the speaker for playback. Sound from the speaker reflects off the surrounding environment and forms echo, which the microphone captures together with the real audio input. The captured input therefore contains echo, and the echo cancellation module builds a filter from the earlier reference signal to cancel the echo before sending the signal out.
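
The reference-signal-plus-adaptive-filter principle can be illustrated with a textbook normalized LMS (NLMS) filter. This is a minimal sketch, not the engine's algorithm: production cancellers such as WebRTC's typically work in the frequency domain and add double-talk detection and nonlinear suppression, all omitted here. The function name, tap count and step size are assumptions.

```python
from collections import deque
from typing import List


def nlms_echo_cancel(reference: List[float], mic: List[float], taps: int = 32,
                     mu: float = 0.5, eps: float = 1e-6) -> List[float]:
    """Subtract the estimated echo of `reference` from `mic` using an NLMS adaptive filter.

    reference: far-end signal sent to the speaker (the canceller's reference)
    mic:       near-end capture = local speech + echo of the reference
    Returns the residual, i.e. the mic signal with the echo estimate removed.
    """
    w = [0.0] * taps                       # adaptive FIR estimate of the echo path
    x = deque([0.0] * taps, maxlen=taps)   # recent reference samples, newest first
    out = []
    for ref_sample, mic_sample in zip(reference, mic):
        x.appendleft(ref_sample)
        echo_est = sum(wi * xi for wi, xi in zip(w, x))
        e = mic_sample - echo_est          # residual after cancellation
        norm = eps + sum(xi * xi for xi in x)
        step = mu * e / norm               # normalized step keeps adaptation stable
        w = [wi + step * xi for wi, xi in zip(w, x)]
        out.append(e)
    return out
```

The filter can only cancel echo that arrives within `taps` samples of the reference, which is why long device and room delays, discussed next, are a problem.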

The principle sounds simple, but practice brings many difficulties. For example, the reference signal received by the echo cancellation module differs from the echo that finally comes back after environmental reflection. Devices also strongly affect echo cancellation, especially Android models sold in China: on one domestic phone brand, the delay from microphone capture to delivery of the audio data approaches 100 milliseconds, so adapting the echo cancellation algorithm to such a long echo delay is critical. Many users also use external sound cards or even emulators when live streaming, which inevitably adds echo delay. Beyond devices, the venue matters a great deal: for an ordinary meeting room, covering an echo path of about 40 meters may be enough, but in some large halls the echo path can approach 100 meters, which is also a challenge.
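
Two pieces of arithmetic help here. Reading the venue figures above as echo path lengths, the delay they add is path length divided by the speed of sound (about 343 m/s in air), so 40 m is roughly 117 ms and 100 m roughly 292 ms. For the device delay, one common approach (assumed here, not confirmed as the engine's method) is to estimate the lag by cross-correlating the reference with the capture and align them before the adaptive filter runs. Both helper names are hypothetical.

```python
from typing import List

SPEED_OF_SOUND_M_S = 343.0  # in air at about 20 degrees C


def echo_path_samples(path_m: float, sample_rate_hz: int) -> int:
    """Samples of delay added by an acoustic echo path of the given length."""
    return round(path_m / SPEED_OF_SOUND_M_S * sample_rate_hz)


def estimate_delay(reference: List[float], mic: List[float], max_lag: int) -> int:
    """Lag in samples at which mic best matches reference, by brute-force cross-correlation.

    Aligning the reference with the capture first means a long device delay
    (e.g. ~100 ms of capture latency) does not have to be covered by filter taps.
    """
    best_lag, best_score = 0, -1.0
    for lag in range(max_lag + 1):
        end = min(len(mic), len(reference) + lag)
        corr = sum(reference[n - lag] * mic[n] for n in range(lag, end))
        if abs(corr) > best_score:  # abs: the echo may arrive with inverted polarity
            best_lag, best_score = lag, abs(corr)
    return best_lag
```

At 16 kHz, a 100 m path needs about 4,665 samples of coverage, far more than a short filter provides, which illustrates why long echo paths are hard.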

As for echo cancellation, Google's open-source WebRTC does provide an echo cancellation module, but WebRTC was designed for real-time audio and video on PCs and adapts less well to mobile devices, especially low-end Android phones. By comparison, Apple designs its hardware and software itself, including acoustic design for its microphones and speakers, so echo cancellation works much better on iOS than on Android. Our audio and video engine is entirely self-developed and has been tested on more than 1,000 models, including real devices and emulators, achieving good echo cancellation.

Interoperability at home and abroad

As mentioned above, many products choose to expand overseas, and even products focused on the Chinese market have some overseas users. Streaming media data and control signaling must therefore interoperate internationally, which requires placing relay nodes sensibly around the world.

This picture shows a typical relay transmission. In a video call between a user in Beijing and a user in Dubai, each connects to a local node according to the nearest-access principle. If those two nodes pull streams from each other directly, quality will be very poor, so suitable relay nodes such as Hong Kong, Singapore or Japan are needed. The data path is chosen by a routing service that runs on top of the physical links. Its routing table must be built from the user scenario, including user distribution, access frequency and peak periods, and the route may differ from one session to the next.
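
Choosing a relay path can be framed as a shortest-path search over measured node-to-node links. The sketch below runs Dijkstra's algorithm with a hypothetical cost of RTT plus a loss penalty; node names follow the Beijing-Dubai example, but every RTT and loss figure in the usage example is made up for illustration.

```python
import heapq
from typing import Dict, List, Tuple

Graph = Dict[str, Dict[str, float]]


def link_cost(rtt_ms: float, loss: float, loss_penalty_ms: float = 1000.0) -> float:
    """Hypothetical link cost: latency plus a penalty proportional to packet loss."""
    return rtt_ms + loss * loss_penalty_ms


def add_link(graph: Graph, a: str, b: str, cost: float) -> None:
    """Register a symmetric link between two nodes."""
    graph.setdefault(a, {})[b] = cost
    graph.setdefault(b, {})[a] = cost


def best_route(graph: Graph, src: str, dst: str) -> Tuple[float, List[str]]:
    """Dijkstra over relay nodes; returns (total cost, node path)."""
    dist = {src: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, src)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node == dst:
            break
        for nxt, cost in graph.get(node, {}).items():
            nd = d + cost
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    if dst not in dist:
        return float("inf"), []
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return dist[dst], path[::-1]


if __name__ == "__main__":
    g: Graph = {}
    add_link(g, "Beijing", "Dubai", link_cost(350, 0.10))      # direct but lossy
    add_link(g, "Beijing", "HongKong", link_cost(40, 0.0))
    add_link(g, "HongKong", "Singapore", link_cost(35, 0.0))
    add_link(g, "Singapore", "Dubai", link_cost(90, 0.01))
    print(best_route(g, "Beijing", "Dubai"))
```

With these illustrative numbers, the route through Hong Kong and Singapore beats the direct link. A routing service would refresh link costs from live measurements, which is one reason the chosen route can change between sessions.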

Massive concurrency

Massive concurrency is a problem every Internet product encounters, so I will not go into detail here. The main considerations are load balancing, smooth capacity expansion, proxy scheduling for regions without node coverage, disaster recovery, access-layer design and so on.
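
For smooth capacity expansion, one widely used technique (named here as an example, not as this system's confirmed design) is consistent hashing: rooms are mapped onto a hash ring of media servers, so adding a server moves only roughly its fair share of rooms, and every moved room lands on the new server. Server and room identifiers and the virtual-node count are hypothetical.

```python
import bisect
import hashlib
from typing import List


class ConsistentHashRing:
    """Map room IDs to media servers; adding a server moves only a small share of rooms."""

    def __init__(self, servers: List[str], vnodes: int = 100):
        self.vnodes = vnodes            # virtual nodes per server smooth the distribution
        self._keys: List[int] = []      # sorted hash positions on the ring
        self._owners: List[str] = []    # server owning each position
        for s in servers:
            self.add(s)

    @staticmethod
    def _hash(key: str) -> int:
        return int(hashlib.md5(key.encode()).hexdigest(), 16)

    def add(self, server: str) -> None:
        for i in range(self.vnodes):
            h = self._hash(f"{server}#{i}")
            idx = bisect.bisect(self._keys, h)
            self._keys.insert(idx, h)
            self._owners.insert(idx, server)

    def lookup(self, room_id: str) -> str:
        if not self._keys:
            raise ValueError("ring has no servers")
        # The first position clockwise from the room's hash owns the room.
        idx = bisect.bisect(self._keys, self._hash(room_id)) % len(self._keys)
        return self._owners[idx]
```

Keeping all participants of a room on the same server avoids cross-server forwarding for that room, while the ring limits how many active rooms are disturbed during expansion.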

How to choose a real-time voice video cloud service provider

The technical barrier to real-time voice and video is relatively high. If you rely on in-house development, you may fail to keep up with the fast-moving market even after investing heavily. Real-time audio and video cloud services are now very mature, so it may be wise to "let professionals do professional work." The criteria for choosing a real-time voice and video cloud service provider can be roughly summarized as follows:

First, and most obviously, the technology must be excellent. Testing product quality is a good way to choose, covering latency, echo cancellation quality and compatibility across many device models.

Second, whether the service has been proven at scale by leading vendors. Many systems look good and stable at small scale but run into all kinds of problems under large-scale concurrency in real-world environments, so the chosen product must be able to support large-scale concurrency on real, complex networks.

Third, the system interface should be simple yet flexible, so that vendors needing only basic functions can integrate quickly while high-end customers can still access customization capabilities. Every stage of the process, from capture, pre-processing, encoding and pushing to pulling, decoding and rendering, should be fully decoupled so that third-party or even the customer's own technology modules can be integrated.
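
A decoupled pipeline can be sketched as an ordered list of named, swappable stages. The `Pipeline` class and stage names below are hypothetical and the stages are toy functions; the point is only that each stage shares a single call signature, so a third-party or customer module (for example a custom pre-processing filter) can replace one stage without touching the others.

```python
from typing import Any, Callable, Dict, List

Stage = Callable[[Any], Any]


class Pipeline:
    """Run named stages in order; any stage can be swapped for a custom module."""

    def __init__(self, order: List[str], stages: Dict[str, Stage]):
        missing = [name for name in order if name not in stages]
        if missing:
            raise ValueError(f"missing stages: {missing}")
        self.order = list(order)
        self.stages = dict(stages)

    def replace(self, name: str, stage: Stage) -> None:
        """Plug in a third-party or customer implementation for one stage."""
        if name not in self.stages:
            raise KeyError(name)
        self.stages[name] = stage

    def run(self, item: Any = None) -> Any:
        for name in self.order:
            item = self.stages[name](item)  # each stage's output feeds the next
        return item
```

A sender would use an order such as capture, preprocess, encode, push, and a receiver pull, decode, render.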

Fourth, technical service must be good: requests must be answered quickly, and a complete operational support system must be able to locate problems fast. Monitoring, alerting and log report collection are therefore very important.
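
As a small illustration of monitoring and alerting on per-second data, the sketch below raises an alarm when the share of recent one-second reports that contain a stall exceeds a threshold. The `StallAlarm` class, window length and threshold are all hypothetical choices.

```python
from collections import deque


class StallAlarm:
    """Alarm when too many recent per-second reports show stalls (hypothetical rule)."""

    def __init__(self, window_s: int = 60, max_stall_ratio: float = 0.05):
        self.reports = deque(maxlen=window_s)  # one bool per second: did playback stall?
        self.max_stall_ratio = max_stall_ratio

    def add_report(self, stalled: bool) -> bool:
        """Record one second of data; return True if the alarm should fire."""
        self.reports.append(stalled)
        if len(self.reports) < self.reports.maxlen:
            return False  # not enough data yet
        ratio = sum(self.reports) / len(self.reports)
        return ratio > self.max_stall_ratio
```

A sliding window reacts within one window length to a sustained problem while ignoring isolated stalls.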

Figure: Second-level raw data
