In-depth analysis of industrial-grade WebRTC application practice

NetEase has more than 10 years of experience in the audio and video field. Internally, we call our full-featured, industrial-grade audio and video solution NRTC, short for NetEase RTC. WebRTC has drawn a great deal of attention in recent years, especially since 2017, when Apple announced WebRTC support in Safari 11. As a result, the Web has become a very important entry point and terminal for audio and video. For us, supporting WebRTC in NRTC means being able to serve the Web as one more terminal and entry point.

1. NRTC technical solutions

NRTC, short for NetEase RTC, is a full-featured, industrial-grade audio and video solution developed by NetEase.

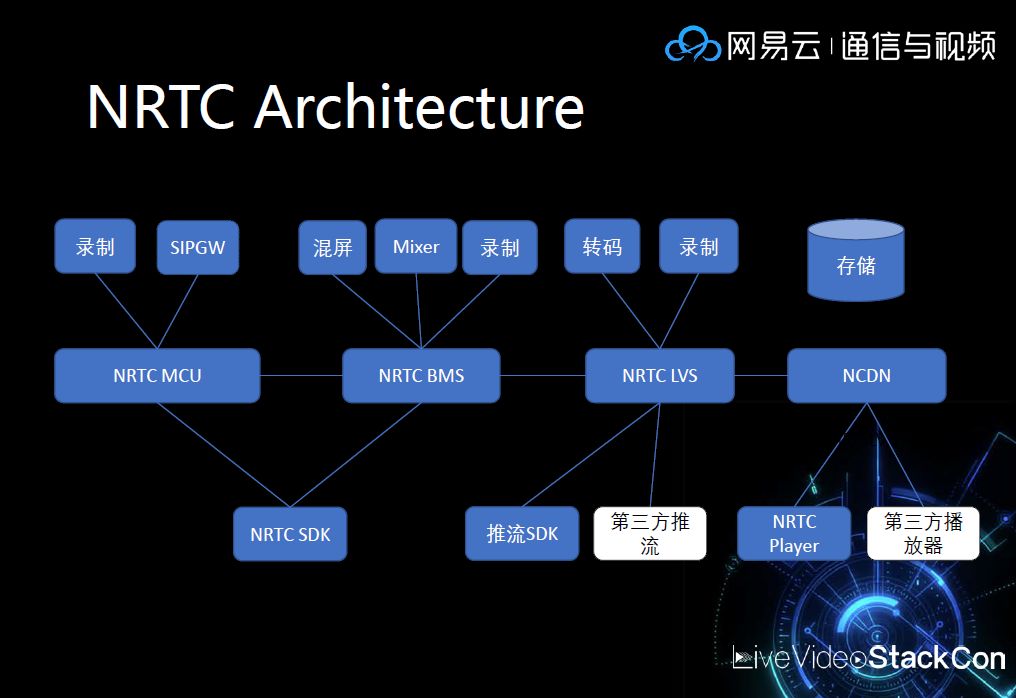

1.1 NRTC technical architecture diagram:

The architecture diagram shows the NRTC SDK, our client SDK for real-time audio and video calls on PC and mobile, and the NRTC MCU, our media server. On the client side, the NRTC SDK pushes streams to and pulls streams from the NRTC MCU, which forwards the media to the other clients. The MCU also forwards the media to NRTC BMS, an interactive live broadcast server. BMS mixes the audio and composites the video (screen mixing) into a single stream and pushes it to NRTC LVS, the live origin server, which in turn feeds our NCDN network. Through large-scale NCDN distribution, the NRTC Player lets a massive number of users pull the stream. The left half of the diagram is a UDP-based solution and the right half is TCP-based. Around this core we also provide recording, mixing, transcoding, storage, and storage-based on-demand playback.

1.2 Functions supported by NRTC:

Real-time audio and video calls

Live broadcast

Interactive live broadcast

On demand

Interactive whiteboard

Short video

1.3 Audio and video technology stack

Signaling: SDP, JSEP, SIP, Jingle, ROAP

Transmission: RTP, RTCP, DTLS, RTMP, FLV, HLS

P2P: ICE, STUN, TURN, NAT

Network: UDP, TCP

Audio: Opus, G.711, AAC, Speex, 3A (acoustic echo cancellation, noise suppression, automatic gain control)

Video: H.264, VP8

QoS: FEC, NACK, BWE

Server: SFU, MCU

Client: capture, rendering, various device adaptations

2. How to understand WebRTC?

WebRTC is a specification defined by the W3C and the IETF. Simply put, it is a framework (a JavaScript API) for audio and video sessions in the browser. It requires no installation and supports P2P transmission. As long as the two sides negotiate through signaling, it can also interoperate with traditional audio and video applications. WebRTC is also an open source project: a C++-based, cross-platform, full-featured audio and video framework provided by Google. The open source project is generally referred to as libwebrtc.

2.1 Architecture of WebRTC

This simplified architecture consists mainly of network transport, an audio engine, and a video engine. The core functionality is implemented in C++ and wrapped in a JavaScript API so that it can be called from JavaScript.

2.2 Features and limitations of WebRTC

Invoked in the browser through a JavaScript API

No signaling protocol defined

Client-based, no SFU/MCU

Based entirely on standards

Depends on the browser's implementation
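Because WebRTC leaves signaling to the application, every deployment has to define its own message format. The sketch below shows a hypothetical JSON schema for the WebSocket messages a gateway could exchange with the browser. The `kind` field, the class names, and the `encode`/`decode` helpers are assumptions. The field names `type`/`sdp` and `candidate`/`sdpMid`/`sdpMLineIndex` mirror the browser's `RTCSessionDescriptionInit` and `RTCIceCandidateInit` dictionaries.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional, Union


@dataclass
class SessionDescription:
    type: str  # "offer" or "answer", as in RTCSessionDescriptionInit
    sdp: str


@dataclass
class IceCandidate:
    candidate: str  # the "candidate:..." string, as in RTCIceCandidateInit
    sdpMid: Optional[str] = None
    sdpMLineIndex: Optional[int] = None


Message = Union[SessionDescription, IceCandidate]


def encode(msg: Message) -> str:
    """Serialize a signaling message for the WebSocket (hypothetical schema)."""
    kind = "candidate" if isinstance(msg, IceCandidate) else "sdp"
    return json.dumps({"kind": kind, **asdict(msg)})


def decode(text: str) -> Message:
    """Parse a signaling message, rejecting anything the schema does not define."""
    data = json.loads(text)
    kind = data.pop("kind", None) if isinstance(data, dict) else None
    if kind == "sdp" and data.get("type") in ("offer", "answer"):
        return SessionDescription(type=data["type"], sdp=data["sdp"])
    if kind == "candidate":
        return IceCandidate(
            candidate=data["candidate"],
            sdpMid=data.get("sdpMid"),
            sdpMLineIndex=data.get("sdpMLineIndex"),
        )
    raise ValueError(f"unsupported signaling message: {text!r}")
```

Keeping the browser's own field names means the JavaScript side can pass decoded objects straight to `setRemoteDescription` and `addIceCandidate` without remapping.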

2.3 How to use WebRTC

1) Method 1: Build audio and video applications on the JavaScript API

This approach is based entirely on JavaScript and uses no media server. Reliability and functionality are very limited, but costs can be kept very low.

2) Method 2: Implementation based on libwebrtc

The WebRTC C++ code is not well engineered for production use: it does too little exception protection and error recovery. Real applications may therefore need many adjustments and modifications.

3) Method 3: Make an existing system compatible with WebRTC

Companies that already have a mature audio and video framework can add WebRTC support to their own systems.

2.4 Comparison of NRTC and WebRTC

NRTC predates WebRTC

NRTC is a complete VoIP solution; the NRTC SDK alone is roughly equivalent to WebRTC

NRTC's implementation is more flexible, whereas WebRTC is bound by standards and has many limitations

NRTC is an industrial-grade implementation with a more mature technical framework

3. How NRTC supports WebRTC

3.1 How NRTC connects to WebRTC

As the simplified architecture in the figure shows, a WebRTC Gateway can connect the NRTC solution to the Web. Between the WebRTC Gateway and the NRTC MCU, media travels as NPDU packets over UDP. On the other side, the gateway connects to the Web over SRTP.

The WebRTC Gateway works as follows.

It consists of two parts: signaling and media. For signaling, the gateway mainly exposes a WebSocket interface, over which the two ends exchange SDP and ICE information. For media, it implements the ICE framework and the SRTP protocol stack to establish the network connection. It also repackages packets, converting between RTP and NPDU. With this gateway in place, the Web can communicate with our other terminals through the MCU.
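The RTP side of the repackaging step can be sketched as follows. The RTP fixed header is parsed per RFC 3550, assuming SRTP has already been removed by the gateway's SRTP stack. The NPDU layout is NetEase-internal and is not described here. The `NPDU_HEADER` format, its version and flag constants, and the helper names below are therefore purely hypothetical: they only show where the RTP fields would be carried.

```python
import struct
from dataclasses import dataclass

RTP_FIXED_HEADER = struct.Struct("!BBHII")
# Hypothetical NPDU header: version, payload type, flags, sequence, timestamp, SSRC.
NPDU_HEADER = struct.Struct("!BBBHII")
NPDU_VERSION = 1         # hypothetical
NPDU_FLAG_MARKER = 0x01  # hypothetical


@dataclass
class RtpPacket:
    payload_type: int
    marker: bool
    sequence: int
    timestamp: int
    ssrc: int
    payload: bytes


def parse_rtp(packet: bytes) -> RtpPacket:
    """Parse an (already SRTP-decrypted) RTP packet per RFC 3550, section 5.1."""
    if len(packet) < RTP_FIXED_HEADER.size:
        raise ValueError("packet shorter than the RTP fixed header")
    b0, b1, seq, ts, ssrc = RTP_FIXED_HEADER.unpack_from(packet)
    if b0 >> 6 != 2:
        raise ValueError("not RTP version 2")
    offset = RTP_FIXED_HEADER.size + 4 * (b0 & 0x0F)  # skip the CSRC list
    if b0 & 0x10:  # header extension: 16-bit profile, 16-bit length in 32-bit words
        if len(packet) < offset + 4:
            raise ValueError("truncated header extension")
        (words,) = struct.unpack_from("!H", packet, offset + 2)
        offset += 4 + 4 * words
    end = len(packet)
    if b0 & 0x20:  # padding: the last byte holds the padding length
        end -= packet[-1]
    if offset > end:
        raise ValueError("malformed RTP packet")
    return RtpPacket(b1 & 0x7F, bool(b1 & 0x80), seq, ts, ssrc, packet[offset:end])


def rtp_to_npdu(pkt: RtpPacket) -> bytes:
    """Re-wrap the RTP fields in the hypothetical NPDU header."""
    flags = NPDU_FLAG_MARKER if pkt.marker else 0
    header = NPDU_HEADER.pack(NPDU_VERSION, pkt.payload_type, flags,
                              pkt.sequence, pkt.timestamp, pkt.ssrc)
    return header + pkt.payload


def npdu_to_rtp(data: bytes) -> bytes:
    """Reverse direction: rebuild a minimal RTP packet (no CSRC, extension, or padding)."""
    _version, pt, flags, seq, ts, ssrc = NPDU_HEADER.unpack_from(data)
    b1 = (0x80 if flags & NPDU_FLAG_MARKER else 0) | pt
    return RTP_FIXED_HEADER.pack(0x80, b1, seq, ts, ssrc) + data[NPDU_HEADER.size:]
```

Dropping header extensions in `npdu_to_rtp` is a simplification. A real gateway would have to regenerate the extensions the browser negotiated, for example the transport-wide sequence number that transport-cc feedback depends on.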

3.2 Work done to achieve NRTC compatibility with WebRTC

Achieve browser compatibility

Build the ICE framework

Build the RTCP protocol stack and obtain feedback values

Ensure reliable connections on the Web

Congestion control

3.3 Browser pitfalls

1) Use adapter.js to achieve browser compatibility

Different browsers and browser versions implement the specification with somewhat different interfaces. Because the differences lie mainly in the interface layer, adapter.js can smooth them over.

2) Video resolution

Some browsers support cropping video to the requested resolution; others do not.

3) The life cycle of the media stream

Media streams in the browser have a limited lifetime, and sometimes the stream obtained contains no video or no audio.

4) The request for user media (getUserMedia) succeeds, but no media stream is sent.

3.4 Lite ICE framework

The ICE framework involves NAT traversal, STUN (RFC 5389), TURN (RFC 5766), ICE (RFC 5245), and TCP. For highly reliable connectivity, TCP connections must also be supported. When one party is a server with a fixed public IP address and the other is a client, ICE Lite can be used. With ICE Lite, the server only needs to provide a host candidate in its answer, namely its public IP address, and no other candidate discovery is required. The full ICE peer, that is, the browser, initiates the connectivity check, which completes in just two steps.
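To make the ICE Lite role concrete, the sketch below shows what the server might do when the browser's connectivity check arrives. It validates a STUN Binding request (RFC 5389) against its own `ice-ufrag`/`ice-pwd`. It then answers with a Binding success response carrying XOR-MAPPED-ADDRESS, MESSAGE-INTEGRITY, and FINGERPRINT. This is a minimal sketch assuming IPv4 and short-term credentials. The function names are the sketch's own. It ignores other ICE attributes such as PRIORITY, ICE-CONTROLLING, and USE-CANDIDATE, and it does not check the request's FINGERPRINT.

```python
import hashlib
import hmac
import ipaddress
import struct
import zlib
from typing import Dict, Optional, Tuple

MAGIC_COOKIE = 0x2112A442
BINDING_REQUEST, BINDING_SUCCESS = 0x0001, 0x0101
ATTR_USERNAME = 0x0006
ATTR_MESSAGE_INTEGRITY = 0x0008
ATTR_XOR_MAPPED_ADDRESS = 0x0020
ATTR_FINGERPRINT = 0x8028
FINGERPRINT_XOR = 0x5354554E


def attr(attr_type: int, value: bytes) -> bytes:
    """Encode one STUN attribute (type, length, value), padded to 4 bytes."""
    return struct.pack("!HH", attr_type, len(value)) + value + b"\x00" * (-len(value) % 4)


def build_message(msg_type: int, txid: bytes, attrs: bytes, password: str) -> bytes:
    """Append MESSAGE-INTEGRITY and FINGERPRINT to a STUN message (RFC 5389)."""
    # The HMAC covers a header whose length already counts the 24-byte MI attribute.
    header = struct.pack("!HHI", msg_type, len(attrs) + 24, MAGIC_COOKIE) + txid
    mac = hmac.new(password.encode(), header + attrs, hashlib.sha1).digest()
    attrs += attr(ATTR_MESSAGE_INTEGRITY, mac)
    # The CRC covers a header whose length also counts the 8-byte FINGERPRINT attribute.
    header = struct.pack("!HHI", msg_type, len(attrs) + 8, MAGIC_COOKIE) + txid
    crc = (zlib.crc32(header + attrs) ^ FINGERPRINT_XOR) & 0xFFFFFFFF
    return header + attrs + attr(ATTR_FINGERPRINT, struct.pack("!I", crc))


def parse_attributes(msg: bytes) -> Dict[int, Tuple[int, bytes]]:
    """Map attribute type to (offset in message, value); first occurrence wins."""
    found, offset = {}, 20
    while offset + 4 <= len(msg):
        attr_type, length = struct.unpack_from("!HH", msg, offset)
        found.setdefault(attr_type, (offset, msg[offset + 4:offset + 4 + length]))
        offset += 4 + length + (-length % 4)
    return found


def handle_binding_request(msg: bytes, src_ip: str, src_port: int,
                           local_ufrag: str, local_pwd: str) -> Optional[bytes]:
    """ICE Lite responder: answer a valid check from the browser, else return None."""
    if len(msg) < 20:
        return None
    msg_type, _, cookie = struct.unpack_from("!HHI", msg)
    if msg_type != BINDING_REQUEST or cookie != MAGIC_COOKIE:
        return None
    found = parse_attributes(msg)
    if ATTR_USERNAME not in found or ATTR_MESSAGE_INTEGRITY not in found:
        return None
    # In a check sent to us, USERNAME is "<our ufrag>:<browser ufrag>".
    if found[ATTR_USERNAME][1].decode(errors="replace").split(":")[0] != local_ufrag:
        return None
    # Verify the request's HMAC with our ice-pwd (length field rewritten to end at MI).
    mi_offset, mi_value = found[ATTR_MESSAGE_INTEGRITY]
    signed = bytearray(msg[:mi_offset])
    struct.pack_into("!H", signed, 2, mi_offset - 20 + 24)
    expected = hmac.new(local_pwd.encode(), bytes(signed), hashlib.sha1).digest()
    if not hmac.compare_digest(expected, mi_value):
        return None
    # XOR-MAPPED-ADDRESS reports the source address we observed (IPv4 only here).
    cookie_bytes = struct.pack("!I", MAGIC_COOKIE)
    xip = bytes(a ^ b for a, b in zip(ipaddress.IPv4Address(src_ip).packed, cookie_bytes))
    xma = struct.pack("!BBH", 0, 0x01, src_port ^ (MAGIC_COOKIE >> 16)) + xip
    return build_message(BINDING_SUCCESS, msg[8:20], attr(ATTR_XOR_MAPPED_ADDRESS, xma), local_pwd)
```

An ICE Lite agent is always the controlled side. It never sends checks of its own, which is why a single request and response is all the server has to handle here.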

3.5 Network monitoring

1) On the signaling side, WebSocket raises a disconnection event

2) RTCPeerConnection raises a disconnection event through its connection state changes

3) Over TCP connections, a keepalive runs on the signaling channel
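The three signals can be merged into one decision. The hypothetical `NetworkMonitor` below is a sketch under assumed values: a 5-second ping interval and a 15-second silence threshold for the signaling keepalive. It treats the RTCPeerConnection `connectionState` values `failed` and `closed` as a lost link. It leaves `disconnected` alone because that state can recover by itself. Timestamps are passed in explicitly so the logic is easy to test.

```python
from typing import Callable, Optional


class NetworkMonitor:
    """Combines WebSocket, RTCPeerConnection, and keepalive signals (hypothetical helper)."""

    def __init__(self, ping_interval: float = 5.0, timeout: float = 15.0,
                 send_ping: Optional[Callable[[], None]] = None, start: float = 0.0):
        self.ping_interval = ping_interval  # assumed heartbeat period, in seconds
        self.timeout = timeout              # assumed silence threshold, in seconds
        self.send_ping = send_ping or (lambda: None)
        self.last_heard = start
        self.last_ping = start
        self.websocket_closed = False
        self.peer_connection_failed = False

    def on_signaling_message(self, now: float) -> None:
        # Any message on the signaling channel, including a pong, proves liveness.
        self.last_heard = now

    def on_websocket_close(self) -> None:
        self.websocket_closed = True

    def on_connection_state(self, state: str) -> None:
        # "disconnected" may recover by itself, so only terminal states count as lost.
        self.peer_connection_failed = state in ("failed", "closed")

    def poll(self, now: float) -> str:
        """Return "lost" when any signal says the link is gone, else "ok"."""
        if self.websocket_closed or self.peer_connection_failed:
            return "lost"
        if now - self.last_heard >= self.timeout:
            return "lost"
        if now - self.last_ping >= self.ping_interval:
            self.send_ping()
            self.last_ping = now
        return "ok"
```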

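3.6 Disconnection and reconnection

1) Start over

Detach the streams and destroy the existing connections

Re-establish the signaling connection, authentication, and the media connection, in that order

2) ICE restart

Renegotiate the ICE credentials and candidates while keeping the existing session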
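The two strategies can be combined into one policy. The sketch below shows a hypothetical ordering, not necessarily the one NRTC uses. It tries the cheaper ICE restart first and falls back to a full restart with exponential backoff. The step callables, the attempt count, and the delays are assumptions.

```python
import time
from typing import Callable, Sequence

Step = Callable[[], bool]  # returns True on success


def full_restart(steps: Sequence[Step]) -> bool:
    """Run the start-over steps in order, stopping at the first failure.

    Expected order: detach streams and destroy connections, then signaling
    connection, authentication, and media connection.
    """
    return all(step() for step in steps)


def reconnect(ice_restart: Step, restart: Step, max_attempts: int = 5,
              base_delay: float = 0.5, max_delay: float = 8.0,
              sleep: Callable[[float], None] = time.sleep) -> bool:
    """Try an ICE restart first; fall back to full restarts with exponential backoff."""
    if ice_restart():
        return True
    for attempt in range(max_attempts):
        sleep(min(max_delay, base_delay * 2 ** attempt))
        if restart():
            return True
    return False
```

A caller would pass something like `lambda: full_restart(steps)` as `restart`. Injecting `sleep` keeps the backoff testable without real delays.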

3.7 Multiplexing and BUNDLE

Reduce the number of UDP connections

Reducing the number of UDP connections has two advantages. First, it shortens connection setup time. Second, in corporate environments many UDP ports have to be opened by the network administrator, so multiple connections increase both the configuration work and the maintenance burden.
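With BUNDLE and rtcp-mux, STUN, DTLS, SRTP, and SRTCP all arrive on one UDP port. The gateway must therefore demultiplex them by inspecting the first bytes of each datagram. The sketch follows the first-byte ranges of RFC 7983 and the RTP/RTCP rule of RFC 5761. The SSRC-to-stream table passed to `route` is hypothetical; in practice it would be built from the negotiated SDP.

```python
import struct
from typing import Dict, Optional, Tuple


def classify(datagram: bytes) -> str:
    """Identify the protocol of a datagram on a bundled port."""
    if not datagram:
        return "unknown"
    first = datagram[0]
    if first <= 3:
        return "stun"  # RFC 7983: first byte 0-3
    if 20 <= first <= 63:
        return "dtls"  # DTLS content types (e.g. 22 = handshake)
    if 128 <= first <= 191:
        # RFC 5761: a second byte of 192-223 is an RTCP packet type (SR=200, RR=201, ...)
        if len(datagram) >= 2 and 192 <= datagram[1] <= 223:
            return "rtcp"
        return "rtp"
    return "unknown"


def route(datagram: bytes, ssrc_to_stream: Dict[int, str]) -> Tuple[str, Optional[str]]:
    """Return (protocol, stream name) so media can be handed to the right track."""
    kind = classify(datagram)
    if kind == "rtp" and len(datagram) >= 12:
        (ssrc,) = struct.unpack_from("!I", datagram, 8)  # RTP SSRC follows seq and timestamp
        return kind, ssrc_to_stream.get(ssrc)
    if kind == "rtcp" and len(datagram) >= 8:
        (ssrc,) = struct.unpack_from("!I", datagram, 4)  # SSRC of the RTCP sender
        return kind, ssrc_to_stream.get(ssrc)
    return kind, None
```

SRTP and SRTCP leave these header bytes unencrypted, so classification and SSRC lookup can happen before decryption.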

3.8 Lost packet recovery and congestion control

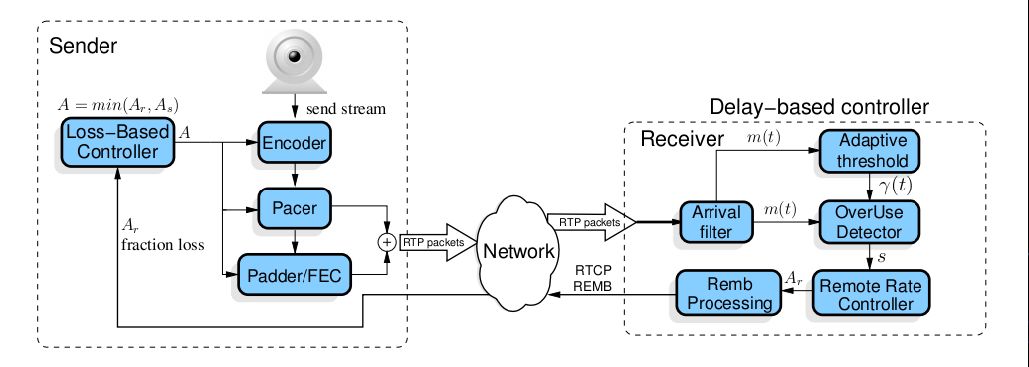

1) GCC

GCC (Google Congestion Control) is the congestion control framework built into WebRTC. It has two models, one based on packet loss and one based on delay. As the figure shows, the loss-based model runs at the sender and the delay-based model runs at the receiver (in the latest WebRTC it has been moved to the sender). The sender performs bandwidth estimation and sends the media stream to the receiver at the estimated rate. The receiver runs its own delay-based bandwidth estimate. When it finds that bandwidth is limited or the bit rate needs adjusting, it reports this to the sender in a REMB message, and the sender readjusts its bit rate. This closes the loop of bandwidth estimation and adaptive bit rate.
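The loss-based half of GCC is simple enough to show directly. The sketch below follows the rule described in the IETF GCC draft (draft-ietf-rmcat-gcc). It cuts the rate when more than 10% of packets are lost, raises it by 5% when loss is below 2%, and otherwise holds it. It then caps the result at the receiver's REMB value. The class name and the minimum and maximum bounds are assumptions.

```python
from typing import Optional


class LossBasedController:
    """Sender-side, loss-based rate control in the style of GCC (illustrative)."""

    def __init__(self, initial_bps: float, min_bps: float = 30_000, max_bps: float = 2_500_000):
        self.rate = initial_bps
        self.min_bps = min_bps  # assumed floor
        self.max_bps = max_bps  # assumed ceiling

    def on_receiver_report(self, fraction_lost: int, remb_bps: Optional[float] = None) -> float:
        """Update the target from the RR "fraction lost" byte (loss * 256)."""
        p = fraction_lost / 256.0
        if p > 0.10:
            self.rate *= 1 - 0.5 * p  # heavy loss: back off in proportion to loss
        elif p < 0.02:
            self.rate *= 1.05         # little loss: probe upward by 5%
        # between 2% and 10%: hold the current rate
        if remb_bps is not None:
            self.rate = min(self.rate, remb_bps)  # never exceed the receiver's estimate
        self.rate = max(self.min_bps, min(self.max_bps, self.rate))
        return self.rate
```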

2) How to make GCC work in the WebRTC Gateway

REMB

The receiving end first estimates the maximum bit rate it can receive. The WebRTC Gateway then uses a REMB message to tell the sending end how to adjust its bit rate and bandwidth.
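A REMB message is an RTCP payload-specific feedback packet (PT=206, FMT=15) defined in draft-alvestrand-rmcat-remb. The sketch below encodes and decodes one. The bit rate is carried as an 18-bit mantissa and a 6-bit exponent, so encoding rounds down to the nearest representable value. The function names are the sketch's own, and the SSRCs in the tests are placeholders.

```python
import struct
from typing import List, Tuple


def build_remb(sender_ssrc: int, bitrate_bps: int, media_ssrcs: List[int]) -> bytes:
    """Encode a REMB packet (draft-alvestrand-rmcat-remb)."""
    exponent, mantissa = 0, bitrate_bps
    while mantissa > 0x3FFFF:  # the mantissa field has 18 bits
        mantissa >>= 1
        exponent += 1
    length_words = 4 + len(media_ssrcs)  # packet length in 32-bit words, minus one
    # V=2 with FMT=15, PT=206; the "SSRC of media source" field is always 0 for REMB.
    header = struct.pack("!BBHII", 0x80 | 15, 206, length_words, sender_ssrc, 0)
    fci = b"REMB" + struct.pack("!I", (len(media_ssrcs) << 24) | (exponent << 18) | mantissa)
    return header + fci + b"".join(struct.pack("!I", s) for s in media_ssrcs)


def parse_remb(packet: bytes) -> Tuple[int, List[int]]:
    """Return (bitrate_bps, media SSRCs) from a REMB packet."""
    if packet[0] & 0x1F != 15 or packet[1] != 206 or packet[12:16] != b"REMB":
        raise ValueError("not a REMB packet")
    (word,) = struct.unpack_from("!I", packet, 16)
    count, exponent, mantissa = word >> 24, (word >> 18) & 0x3F, word & 0x3FFFF
    ssrcs = list(struct.unpack_from(f"!{count}I", packet, 20))
    return mantissa << exponent, ssrcs
```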

GCC feedback

The gateway feeds correct transport-cc (transport-wide congestion control) feedback to the delay-based controller so that it can compute correct delay and bandwidth estimates. It also feeds RTCP SR/RR packets to the loss-based controller so that it can calculate packet loss.
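For the browser's loss-based controller to see correct loss, the gateway's Receiver Reports must carry correct statistics. The sketch below computes the RR "cumulative number of packets lost" and "fraction lost" fields following RFC 3550 (Appendices A.1 and A.3), in simplified form. It omits source probation and the resynchronization after large sequence jumps. The class name is the sketch's own. The 3000-packet dropout limit is the `MAX_DROPOUT` value from RFC 3550's sample code.

```python
class ReceptionStats:
    """Per-SSRC loss statistics for RTCP Receiver Reports (simplified RFC 3550 A.1/A.3)."""

    MAX_DROPOUT = 3000

    def __init__(self, first_seq: int):
        self.base_seq = first_seq
        self.max_seq = first_seq
        self.cycles = 0  # number of 16-bit sequence wraps, times 65536
        self.received = 1
        self.expected_prior = 0
        self.received_prior = 0

    def on_packet(self, seq: int) -> None:
        delta = (seq - self.max_seq) & 0xFFFF
        if 0 < delta < self.MAX_DROPOUT:  # in order, possibly after a gap
            if seq < self.max_seq:
                self.cycles += 0x10000    # the sequence number wrapped around
            self.max_seq = seq
        # otherwise a duplicate or reordered packet; it still counts as received
        self.received += 1

    def expected(self) -> int:
        return self.cycles + self.max_seq - self.base_seq + 1

    def cumulative_lost(self) -> int:
        return self.expected() - self.received

    def fraction_lost(self) -> int:
        """Loss since the previous report, as the 8-bit fixed-point RR field."""
        expected = self.expected()
        expected_interval = expected - self.expected_prior
        received_interval = self.received - self.received_prior
        self.expected_prior, self.received_prior = expected, self.received
        lost_interval = expected_interval - received_interval
        if expected_interval == 0 or lost_interval <= 0:
            return 0
        return (lost_interval << 8) // expected_interval
```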

3) Packet loss retransmission (NACK)

Retransmission is implemented in both directions: the WebRTC Gateway and the browser exchange NACK RTCP feedback messages to request retransmission of lost packets.
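Generic NACK is an RTCP transport-layer feedback message (PT=205, FMT=1) defined in RFC 4585. Each 32-bit entry names one lost packet (PID) plus a 16-bit bitmask (BLP) of further losses among the next 16 sequence numbers. The sketch below packs a list of missing sequence numbers into such entries and expands them back. It assumes the list is already in ascending sequence order. The function names are the sketch's own, and the SSRCs in the tests are placeholders.

```python
import struct
from typing import List, Sequence, Tuple


def nack_entries(lost: Sequence[int]) -> List[Tuple[int, int]]:
    """Group lost sequence numbers (ascending, wrap-aware) into (PID, BLP) pairs."""
    entries, i = [], 0
    while i < len(lost):
        pid, blp, j = lost[i], 0, i + 1
        while j < len(lost):
            diff = (lost[j] - pid) & 0xFFFF  # distance modulo 2^16
            if not 1 <= diff <= 16:
                break
            blp |= 1 << (diff - 1)  # bit k set means PID + k + 1 is also lost
            j += 1
        entries.append((pid, blp))
        i = j
    return entries


def build_nack(sender_ssrc: int, media_ssrc: int, lost: Sequence[int]) -> bytes:
    """Encode a Generic NACK packet (RFC 4585, section 6.2.1)."""
    entries = nack_entries(lost)
    header = struct.pack("!BBHII", 0x80 | 1, 205, 2 + len(entries), sender_ssrc, media_ssrc)
    return header + b"".join(struct.pack("!HH", pid, blp) for pid, blp in entries)


def parse_nack(packet: bytes) -> List[int]:
    """Expand a Generic NACK back into the list of requested sequence numbers."""
    if packet[0] & 0x1F != 1 or packet[1] != 205:
        raise ValueError("not a Generic NACK")
    seqs = []
    for offset in range(12, len(packet) - 3, 4):
        pid, blp = struct.unpack_from("!HH", packet, offset)
        seqs.append(pid)
        seqs.extend((pid + k + 1) & 0xFFFF for k in range(16) if blp & (1 << k))
    return seqs
```

The sender side would look each requested sequence number up in a short history buffer of recently sent packets and retransmit it. Entries that have already aged out of the buffer are simply skipped.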

3.9 An SDP example
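This example is for illustration only; it is not the SDP that NRTC uses. The sketch below assembles an SDP answer that a gateway built along the lines described above might return:

- `a=ice-lite` declares the ICE Lite role.
- `a=group:BUNDLE` and `a=rtcp-mux` enable multiplexing.
- `a=rtcp-fb` lines advertise NACK, REMB (`goog-remb`), and transport-cc.

All addresses, credentials, payload type numbers, and the extension ID are hypothetical. A real answer must mirror the payload types and header extension IDs of the browser's offer.

```python
from typing import List


def build_answer_sdp(ip: str, port: int, ufrag: str, pwd: str, fingerprint: str) -> str:
    """Assemble an illustrative SDP answer for an ICE Lite gateway (values hypothetical)."""
    lines: List[str] = [
        "v=0",
        f"o=- 1 2 IN IP4 {ip}",
        "s=-",
        "t=0 0",
        "a=ice-lite",          # the gateway only answers connectivity checks
        "a=group:BUNDLE 0 1",  # audio and video share one transport
    ]
    media = [
        # (mid, kind, payload type, rtpmap, rtcp-fb values)
        ("0", "audio", 111, "opus/48000/2", ["transport-cc"]),
        ("1", "video", 96, "VP8/90000", ["nack", "nack pli", "goog-remb", "transport-cc"]),
    ]
    for mid, kind, pt, rtpmap, feedback in media:
        lines += [
            f"m={kind} {port} UDP/TLS/RTP/SAVPF {pt}",
            f"c=IN IP4 {ip}",
            f"a=mid:{mid}",
            "a=sendrecv",
            "a=rtcp-mux",
            f"a=ice-ufrag:{ufrag}",
            f"a=ice-pwd:{pwd}",
            f"a=fingerprint:sha-256 {fingerprint}",
            "a=setup:passive",  # the browser acts as the DTLS client
            "a=extmap:3 http://www.ietf.org/id/draft-holmer-rmcat-transport-wide-cc-extensions-01",
            f"a=rtpmap:{pt} {rtpmap}",
            *[f"a=rtcp-fb:{pt} {fb}" for fb in feedback],
            f"a=candidate:1 1 udp 2130706431 {ip} {port} typ host",  # single host candidate
            "a=end-of-candidates",
        ]
    return "\r\n".join(lines) + "\r\n"
```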
